Altman’s “Gigawatt a Week” and the Capital Challenge Ahead for AI

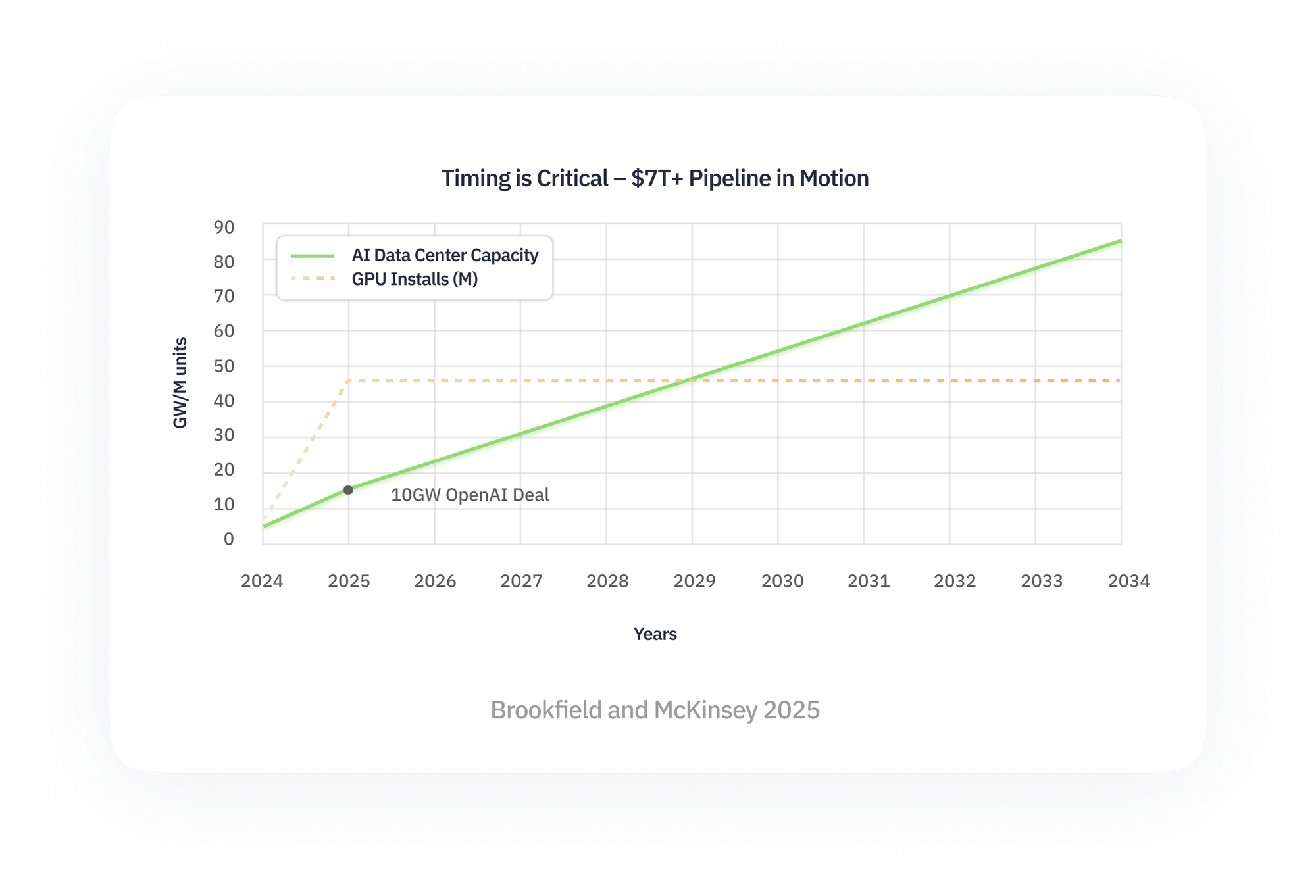

Sam Altman, CEO of OpenAI, recently published a blog post outlining his vision for AI infrastructure at a scale rarely seen outside of energy or national defense. His rallying cry: a gigawatt of compute a week.

To put that into perspective, one gigawatt is roughly the output of a nuclear power plant, enough to power about 750,000 homes. Altman isn’t measuring AI capacity in teraflops or GPU count. He’s framing it in the language of energy production because AI now expands only as quickly as new power is brought online.
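As a rough sanity check on these figures, the sketch below works through the arithmetic: the average draw per home implied by 750,000 homes per gigawatt, and the capacity a gigawatt-a-week pace would add if sustained for a year. The 52-week horizon and all function names are assumptions made for illustration, not figures from Altman’s post.

```python
# Back-of-the-envelope arithmetic for the "gigawatt a week" framing.
# The homes-per-gigawatt figure comes from the article; the 52-week
# horizon is an illustrative assumption.

GIGAWATT_W = 1_000_000_000   # one gigawatt expressed in watts
HOMES_PER_GW = 750_000       # figure quoted in the article
WEEKS_PER_YEAR = 52          # assumption: the pace holds for a full year


def implied_kw_per_home(homes_per_gw: int = HOMES_PER_GW) -> float:
    """Average continuous draw per home, in kilowatts."""
    return GIGAWATT_W / homes_per_gw / 1_000


def annual_gw_added(gw_per_week: float = 1.0, weeks: int = WEEKS_PER_YEAR) -> float:
    """Total capacity added if the weekly pace is sustained."""
    return gw_per_week * weeks


def homes_equivalent(gw: float, homes_per_gw: int = HOMES_PER_GW) -> int:
    """Number of homes that the given capacity could power."""
    return round(gw * homes_per_gw)


if __name__ == "__main__":
    yearly_gw = annual_gw_added()
    print(f"Implied draw per home: {implied_kw_per_home():.2f} kW")
    print(f"Capacity added per year: {yearly_gw:.0f} GW")
    print(f"Homes equivalent: {homes_equivalent(yearly_gw):,}")
```

Under these assumptions, a year at that pace would add 52 gigawatts, the equivalent of powering about 39 million homes.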

This vision requires a level of coordination across chips, power, construction, and capital markets that dwarfs the traditional approach of a hyperscaler writing a large check and adding capacity incrementally.

Altman also hinted at financing. He wrote that later this year, OpenAI will explore “interesting new ideas” for funding this buildout. Reading between the lines, that likely means tapping capital sources beyond Microsoft’s balance sheet. Projects at gigawatt scale need blended financing: sovereign funds, government subsidies, corporate partnerships, and eventually, securitized products that spread exposure across investors rather than concentrating it in one institution.

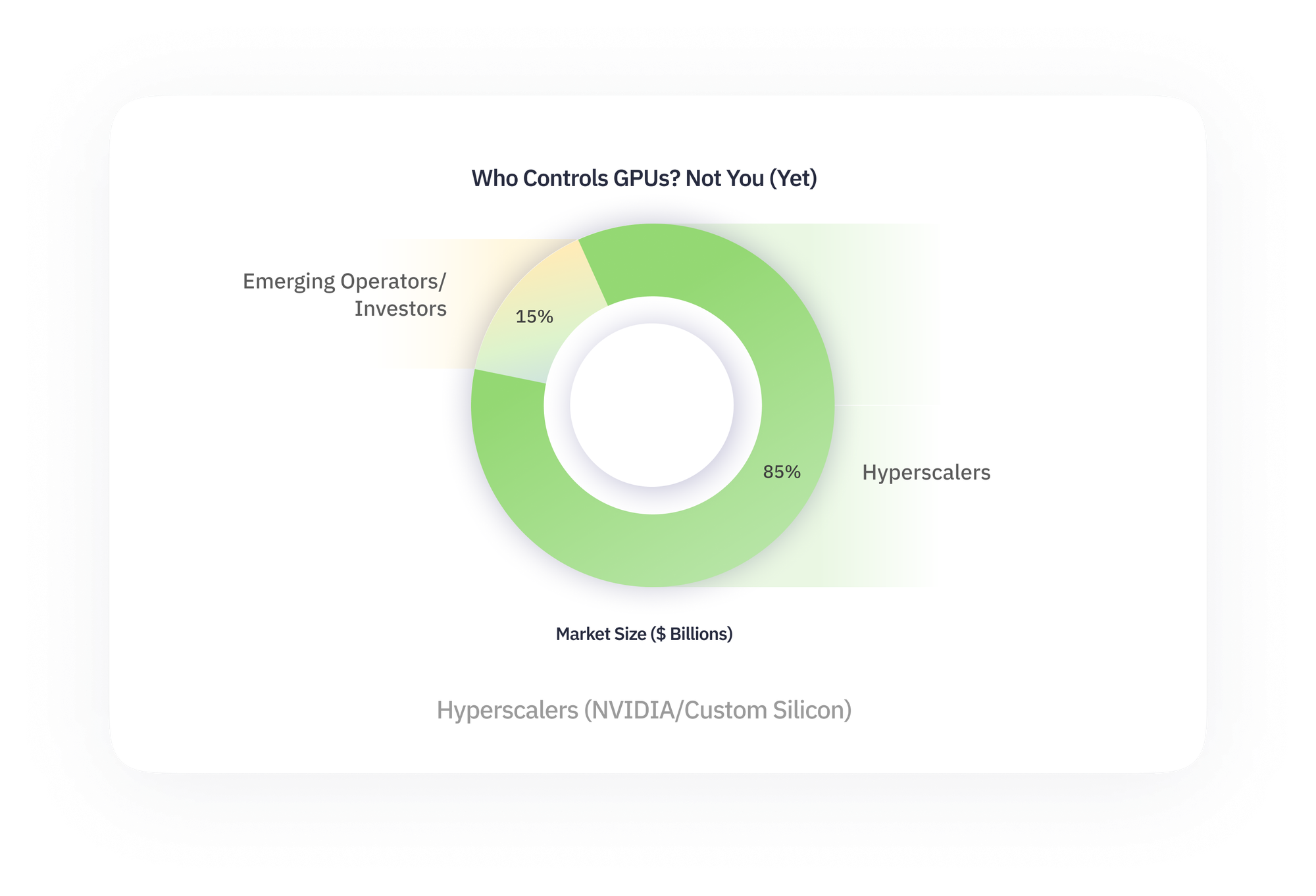

For now, the balance of power remains heavily skewed. Roughly 85% of the world’s GPUs sit inside hyperscalers, companies with both the capital and the control to dictate supply. The remaining 15% or so belongs to emerging operators and investors experimenting with new models of access. That concentration isn’t sustainable as AI demand outpaces the ability of even the largest firms to self-finance growth.

Why does this matter? Because Altman’s framing confirms that the financial architecture of AI infrastructure needs to change quickly. The first wave of AI buildout was financed like software: equity, cloud contracts, venture debt. The next wave will look more like energy and utilities: long-dated contracts, revenue-sharing, and structured instruments that tie payouts directly to usage.

For operators, this underscores that scale is no longer judged only by the number of GPUs purchased but by energized megawatts and proven utilization. Capital will follow utilization because investors want evidence of productive assets, not just purchase orders. That principle is already visible in leaseback deals like Nvidia–Lambda ($1.5B for 18,000 GPUs).
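To make the link between deal size and utilization concrete, the sketch below divides the headline Nvidia–Lambda figures to get an implied value per GPU, then shows how that figure scales when only a fraction of the fleet is productively used. The utilization rates are hypothetical inputs, not data from the deal, and the per-GPU number is a blended average that ignores contract length, hardware mix, and other terms.

```python
# Illustrative arithmetic on the leaseback figures cited in the article.
# Utilization values passed in below are hypothetical, not deal terms.

DEAL_VALUE_USD = 1_500_000_000   # $1.5B, as cited in the article
GPU_COUNT = 18_000               # 18,000 GPUs, as cited in the article


def value_per_gpu(deal_value: float, gpu_count: int) -> float:
    """Blended deal value per GPU, ignoring term and hardware mix."""
    if gpu_count <= 0:
        raise ValueError("gpu_count must be positive")
    return deal_value / gpu_count


def value_per_utilized_gpu(deal_value: float, gpu_count: int, utilization: float) -> float:
    """Deal value per GPU that is actually in productive use.

    `utilization` is the fraction of the fleet doing paid work (0 < u <= 1).
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the interval (0, 1]")
    return value_per_gpu(deal_value, gpu_count) / utilization


if __name__ == "__main__":
    print(f"Blended value per GPU: ${value_per_gpu(DEAL_VALUE_USD, GPU_COUNT):,.0f}")
    for u in (1.0, 0.75, 0.5):  # hypothetical utilization scenarios
        per_gpu = value_per_utilized_gpu(DEAL_VALUE_USD, GPU_COUNT, u)
        print(f"At {u:.0%} utilization: ${per_gpu:,.0f} per productive GPU")
```

The blended figure works out to roughly $83,000 per GPU. Halving utilization doubles the capital tied up per productive GPU, which is one way to read why investors look for proven utilization rather than purchase orders.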

For investors, Altman’s metaphor reframes compute as a new asset class, which is the core of Compute Labs’ thesis. Once compute is treated like energy, it demands financial instruments that can manage depreciation, hedge volatility, and stabilize cash flows. Tokenization, securitization, and treasury-style models are all potential solutions.

At Compute Labs, we see this as validation of our mission: building the financial layer for AI infrastructure as an emerging asset class. By creating GPU structured products, we allow capital to flow into the very bottleneck Altman has put at the center of the conversation.

Learn more about how we’re structuring GPU investments.